Jun 25, 2024

Gautam Thapar

Introduction

Every year, teachers in the U.S. spend over 400 million hours grading student work. As that workload grows, many educators are understandably turning to AI solutions like ChatGPT and EnlightenAI to streamline the process. But because grading and feedback have such a significant impact on student learning, it's crucial to choose a tool you can trust. Recent research has shown that ChatGPT, especially the earlier, cheaper models on which many AI grading tools are built, is not yet ready to be used for grading.

At EnlightenAI, we understand these concerns, which is why we set out to rigorously test the accuracy and reliability of our AI grading assistant against both seasoned human graders and other AI technologies. We'll be releasing a white paper in the next few weeks sharing more detail on the findings we preview here.

The study setup

In our study, we selected 437 student work samples that had previously been evaluated by educators at DREAM Charter Schools in New York. To simulate what grading might have looked like if DREAM had used EnlightenAI from the start, we entered the context of each assignment into EnlightenAI, graded five papers, and then had it generate scores and feedback for the remaining papers. The whole grading process took less than an hour and used the exact same technology we offer to our users for free.

The Result: EnlightenAI met or exceeded the accuracy benchmarks for well-trained human scorers

Yes, you read that right. Researchers have put teams of human scorers through a 3-hour calibration training on scoring essays with a holistic rubric and then measured how consistently those scorers agreed with one another.

EnlightenAI met or beat these human benchmarks while exceeding ChatGPT’s performance by a wide margin. For the first time ever, teachers and school leaders have access to a personalized scoring and feedback tool that competes with well-calibrated human graders as well as with state-of-the-art automated essay scoring tools.

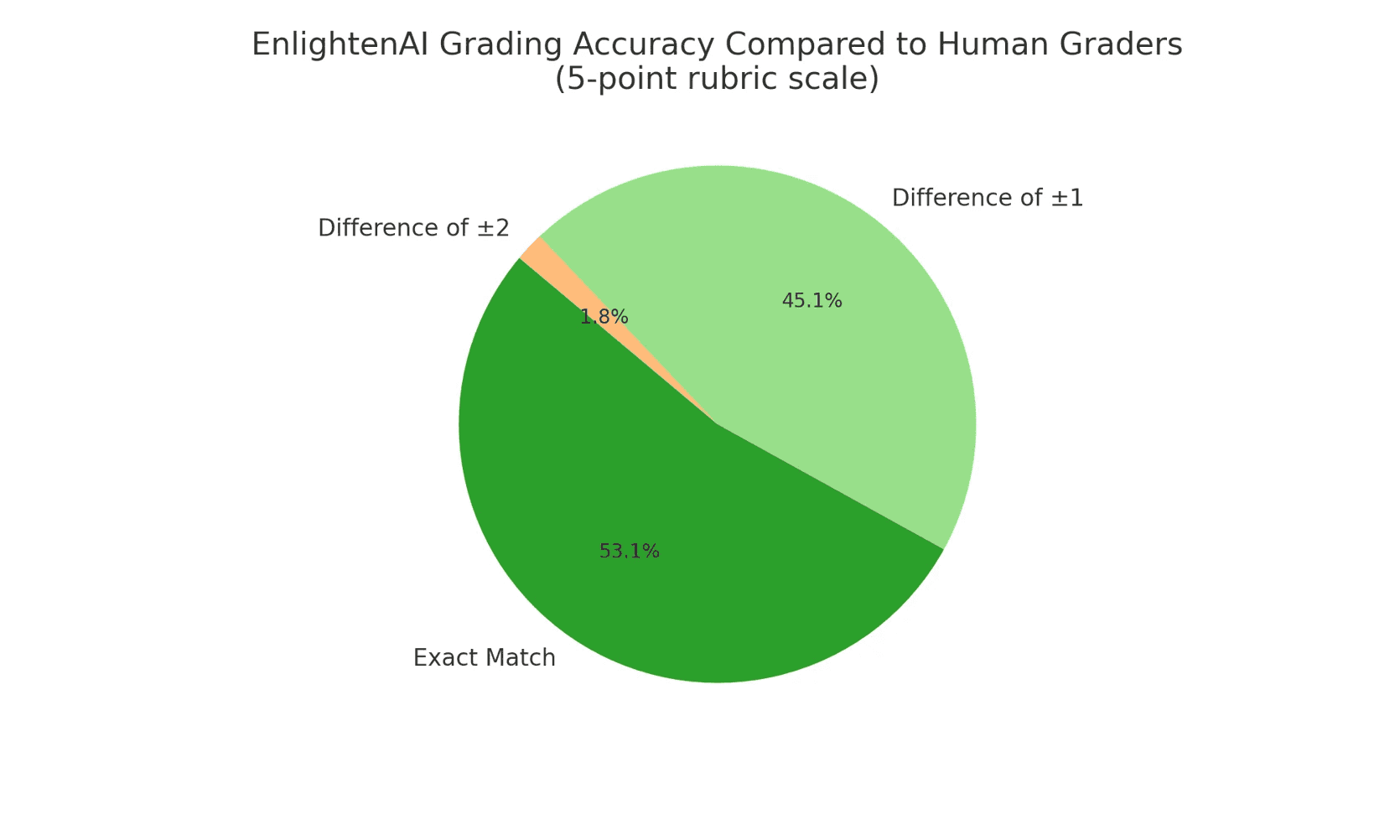

How often did EnlightenAI give the exact same score as DREAM graders?

How effective was EnlightenAI at producing a ‘perfect match’ with its human scorers? On assessments scored with a 5-point New York State constructed-response rubric (scores from 0 to 4), EnlightenAI matched the exact score assigned by DREAM educators in 53% of cases. By comparison, in studies using a 6-point rubric (scores from 1 to 6), well-trained human graders, after 3 hours of training and under continuous monitoring, agreed on the exact same score 51% of the time. ChatGPT performed worse than both, matching human scorers only 20% to 42% of the time on that same 6-point rubric. You can view the distribution of EnlightenAI’s errors below. An error of 0 is a perfect match, while an error of +1 or -1 means EnlightenAI missed the mark by one point in one direction or the other.
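For readers who want to run the same kind of check on their own grading data, here is a minimal Python sketch of the two quantities above: the exact-match rate and the distribution of signed errors. It assumes two equal-length lists of integer scores for the same papers and defines an error as the AI score minus the human score (an assumed sign convention). The function names and scores are hypothetical, invented for illustration; this is not EnlightenAI's evaluation code or data from the DREAM study.

```python
from collections import Counter


def exact_match_rate(ai_scores, human_scores):
    """Fraction of papers where the AI score equals the human score."""
    if not ai_scores or len(ai_scores) != len(human_scores):
        raise ValueError("need two non-empty score lists of equal length")
    matches = sum(a == h for a, h in zip(ai_scores, human_scores))
    return matches / len(ai_scores)


def error_distribution(ai_scores, human_scores):
    """Count of signed errors (AI minus human, assumed convention); 0 is a perfect match."""
    if not ai_scores or len(ai_scores) != len(human_scores):
        raise ValueError("need two non-empty score lists of equal length")
    return Counter(a - h for a, h in zip(ai_scores, human_scores))


# Hypothetical scores on a 0-4 rubric (invented, not study data).
ai = [3, 2, 4, 1, 2, 3, 0, 2]
human = [3, 3, 4, 1, 1, 3, 1, 2]
print(exact_match_rate(ai, human))
print(sorted(error_distribution(ai, human).items()))
```

On these made-up scores, 5 of 8 papers match exactly, so the exact-match rate is 0.625, and the errors break down as two papers at -1, five at 0, and one at +1.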

How often did EnlightenAI assign scores within 1 point of DREAM graders?

Grading is an imperfect science, and on many rubrics, a one-point difference in scores is largely subjective. A highly reliable and consistent grading system not only produces exact matches with calibrated human scorers but also keeps its errors small when it misses. On this measure, EnlightenAI really shines.

EnlightenAI assigned scores within one point of the human scores in 98% of assessments, and it never missed by more than two points across all 437 papers. Notably, this is an area where human scoring consistency lags behind: well-trained human graders assign scores within one point of each other just 74% of the time. Interestingly, ChatGPT outperforms humans here, assigning scores within one point of human scorers 76% to 89% of the time, though its performance varies widely depending on the student work samples and the task assigned. On these measures, EnlightenAI outperformed both humans and ChatGPT.
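The within-one-point rate (often called adjacent agreement) and the largest miss can be computed the same way. This is again a hypothetical sketch with invented scores, assuming both graders give integer scores on the same rubric; the function names are illustrative, not part of any EnlightenAI tool.

```python
def within_n_rate(ai_scores, human_scores, n=1):
    """Fraction of papers where |AI - human| <= n (adjacent agreement when n=1)."""
    if not ai_scores or len(ai_scores) != len(human_scores):
        raise ValueError("need two non-empty score lists of equal length")
    hits = sum(abs(a - h) <= n for a, h in zip(ai_scores, human_scores))
    return hits / len(ai_scores)


def max_abs_error(ai_scores, human_scores):
    """Largest absolute gap between the two graders on any single paper."""
    if not ai_scores or len(ai_scores) != len(human_scores):
        raise ValueError("need two non-empty score lists of equal length")
    return max(abs(a - h) for a, h in zip(ai_scores, human_scores))


# Hypothetical scores on a 0-4 rubric (invented, not study data).
ai = [3, 2, 4, 1, 2, 3, 0, 4]
human = [3, 3, 4, 1, 1, 3, 1, 2]
print(within_n_rate(ai, human))  # within one point
print(max_abs_error(ai, human))  # worst single miss
```

Here 7 of 8 papers fall within one point (0.875), and the largest miss is 2 points. Setting `n=0` in the same function recovers the exact-match rate.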

How can we compare EnlightenAI directly to other benchmarks for grading accuracy?

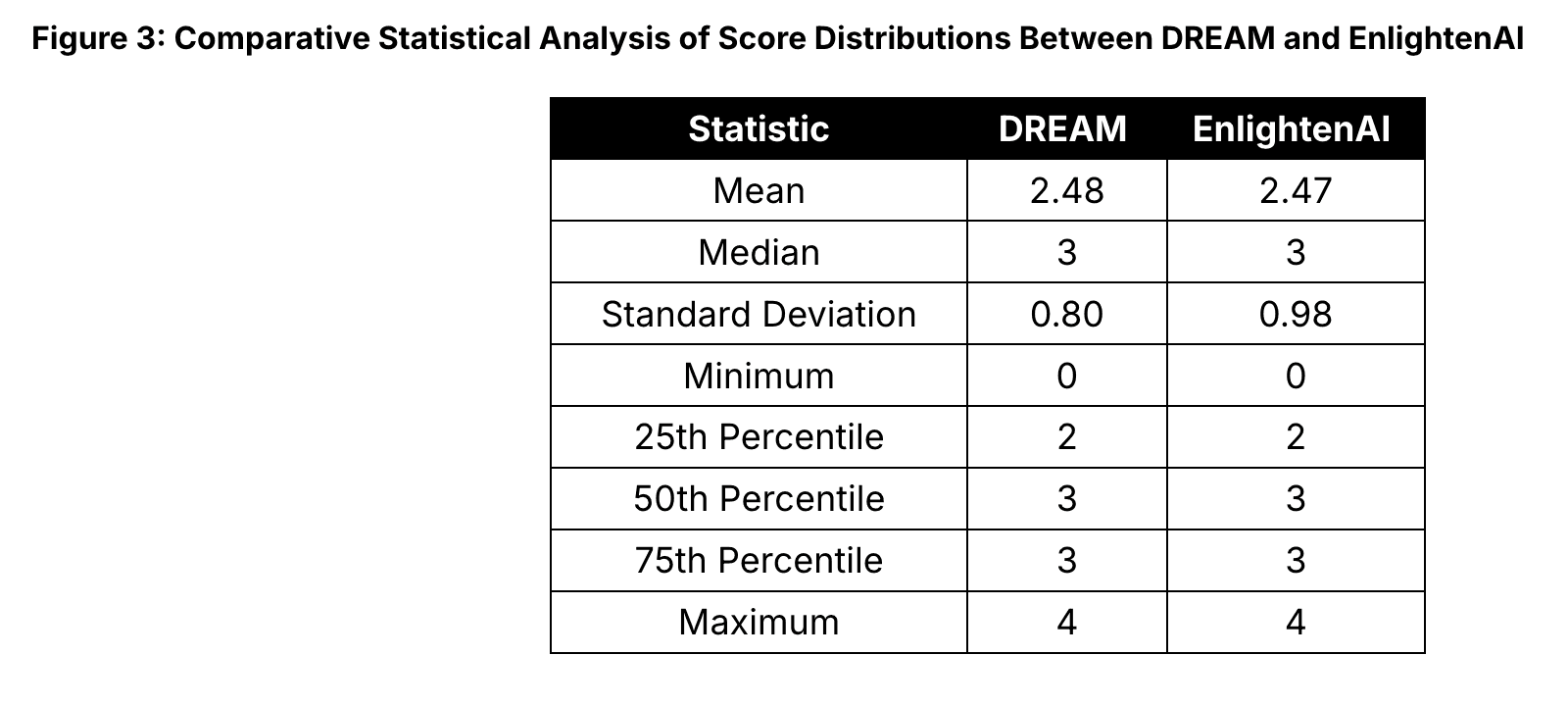

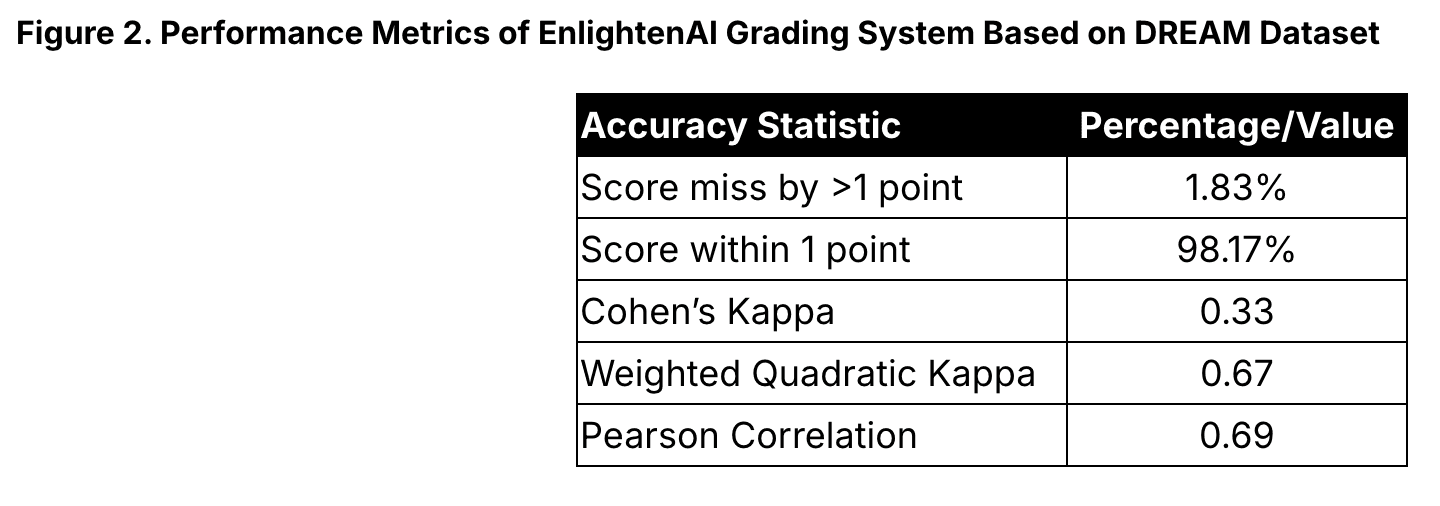

You might have noticed that EnlightenAI’s collaborative study with DREAM used a 5-point scoring rubric, not the 6-point scale used to benchmark human scorers and ChatGPT. To account for this difference, we calculated two metrics that allow a more direct, apples-to-apples comparison.

Cohen’s Kappa: This statistic measures agreement beyond what would be expected by chance. Because chance agreement depends on how many score points a rubric has, this makes results on different rubric scales easier to compare. Values closer to 1 indicate stronger agreement, and 0 means agreement no better than chance. Put more simply, Cohen's Kappa tells you how good your scoring system really is at producing an exact match with a given standard once you account for random chance and the scale of your rubric. EnlightenAI scored a Kappa of 0.33, extremely close to the 0.36 "fair agreement" benchmark set by well-trained humans. ChatGPT achieves a Cohen’s Kappa between 0.01 and 0.22 depending on the sample, lagging humans and EnlightenAI by a significant margin. All in all, even two well-calibrated human graders are not great at producing exactly the same score for the same piece of work, but EnlightenAI is competitive with even the best human scoring.
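Cohen's Kappa compares the observed agreement p_o with the agreement p_e you would expect if each grader assigned scores independently at their own observed rates: kappa = (p_o - p_e) / (1 - p_e). The sketch below is a from-scratch illustration on invented scores, not the calculation used in the study; scikit-learn's `cohen_kappa_score` computes the same unweighted statistic if you prefer a library.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa: (p_o - p_e) / (1 - p_e)."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("need two non-empty rating lists of equal length")

    # Observed agreement: share of papers given the identical score.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: probability both raters land on the same score
    # if each scored independently at their own observed rates.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)

    if p_e == 1:
        raise ValueError("kappa is undefined when both raters use one identical score")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical scores on a 0-4 rubric (invented for illustration).
human = [0, 1, 2, 2, 3, 4, 1, 2]
ai = [0, 1, 2, 3, 3, 4, 2, 2]
print(round(cohens_kappa(ai, human), 3))
```

On these invented scores, 6 of 8 papers match (p_o = 0.75), but chance alone would produce about 23% agreement (p_e = 15/64), so kappa comes out to about 0.67, noticeably lower than the raw match rate.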

Quadratic Weighted Kappa: This goes further, assessing not just whether scores match but how severe the discrepancies are when they don’t. Again, values closer to 1 signal stronger consistency: your grading system is not only good at producing exact matches with the established standard, but when it does make errors, they're small. EnlightenAI scored 0.674 here, showing that even when it doesn’t hit the mark exactly, it’s not far off. To put this in perspective, state-of-the-art automated essay scoring (AES) systems, which are trained on hundreds of work samples for highly specific tasks, typically score between 0.57 and 0.8. AES systems are the best in the world at producing reliable scores, but they don't generate feedback and are notoriously narrow. By contrast, EnlightenAI is designed to be flexible and adapt to just about any written task. Looking at summary statistics comparing EnlightenAI's predicted scores with the scores DREAM educators actually gave, it's easy to see why EnlightenAI rated so well across different measures of reliability.
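Quadratic Weighted Kappa replaces the all-or-nothing notion of a match with a penalty that grows with the square of the distance between the two scores, so a two-point miss costs four times as much as a one-point miss. Below is a minimal from-scratch sketch, assuming integer scores on a known range such as the 0 to 4 rubric above; the function name and data are hypothetical. scikit-learn's `cohen_kappa_score(..., weights="quadratic")` should give the same result when every score point appears in the data.

```python
def quadratic_weighted_kappa(rater_a, rater_b, min_score, max_score):
    """Quadratic weighted kappa for integer scores in [min_score, max_score]."""
    n = len(rater_a)
    n_cat = max_score - min_score + 1
    if n == 0 or n != len(rater_b):
        raise ValueError("need two non-empty rating lists of equal length")
    if n_cat < 2:
        raise ValueError("need at least two score points")
    if any(not min_score <= s <= max_score for s in list(rater_a) + list(rater_b)):
        raise ValueError("score outside the rubric range")

    # observed[i][j] counts papers scored i by rater A and j by rater B.
    observed = [[0] * n_cat for _ in range(n_cat)]
    for a, b in zip(rater_a, rater_b):
        observed[a - min_score][b - min_score] += 1

    # Marginal totals: how often each rater used each score point.
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(n_cat)) for j in range(n_cat)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(n_cat):
        for j in range(n_cat):
            # Quadratic penalty: 0 on the diagonal, 1 for the largest possible miss.
            weight = (i - j) ** 2 / (n_cat - 1) ** 2
            # Expected count if the raters scored independently.
            expected = hist_a[i] * hist_b[j] / n
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * expected

    if weighted_expected == 0:
        raise ValueError("QWK is undefined when both raters use one identical score")
    return 1.0 - weighted_observed / weighted_expected


# Hypothetical scores on a 0-4 rubric (invented for illustration).
human = [0, 1, 2, 2, 3, 4, 1, 2]
ai = [0, 1, 2, 3, 3, 4, 2, 2]
print(round(quadratic_weighted_kappa(ai, human, 0, 4), 3))
```

On these invented scores, where both disagreements are only one point apart, QWK comes out to 81/89, about 0.91. Small misses are penalized lightly, which is why a grader that rarely misses by more than a point can score well on this metric even without a very high exact-match rate.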